The definition of ‘Artificial Intelligence’ in SB 1047 was actually meant for systems, not models

Summary: I was amazed earlier today when I discovered that one of the most important pieces of proposed legislation in the world is using a key definition that was designed for a totally different situation – and that it turns out that my earlier recommendations for this legislation happen to be in complete harmony with the context of the original definition. I hope that now that we have these key issues out in the open, we can for the first time tackle the truly challenging moral and social issues at the heart of AI regulation.

Unearthing the source of the definition problem

I recently studied California’s proposed SB 1047 bill, and discovered that the critical definition in the bill, of “artificial intelligence model”, was very nearly excellent, but needed one critical change:

“The current definition of ‘artificial intelligence model’ in SB 1047 is a good one, and doesn’t need many changes. Simply renaming it to ‘artificial intelligence system’, and then changing the name and definition of ‘covered model’ to ‘covered system’ would go a long way.”

I found that without this change, the bill does not actually cover any of the largest current or likely future models. I just learned how this happened.

This morning I got some critical information from the Privacy Committee in CA about a bill called AB 2885. The purpose of this bill is to define the term “artificial intelligence” consistently across a number of pieces of Californian legislation. They explained to me that this bill is actually the source of the definition in SB 1047. It states:

“This bill would define the term ‘artificial intelligence’ for the purposes of the above-described provisions to mean an engineered or machine-based system that varies in its level of autonomy and that can, for explicit or implicit objectives, infer from the input it receives how to generate outputs that can influence physical or virtual environments.”

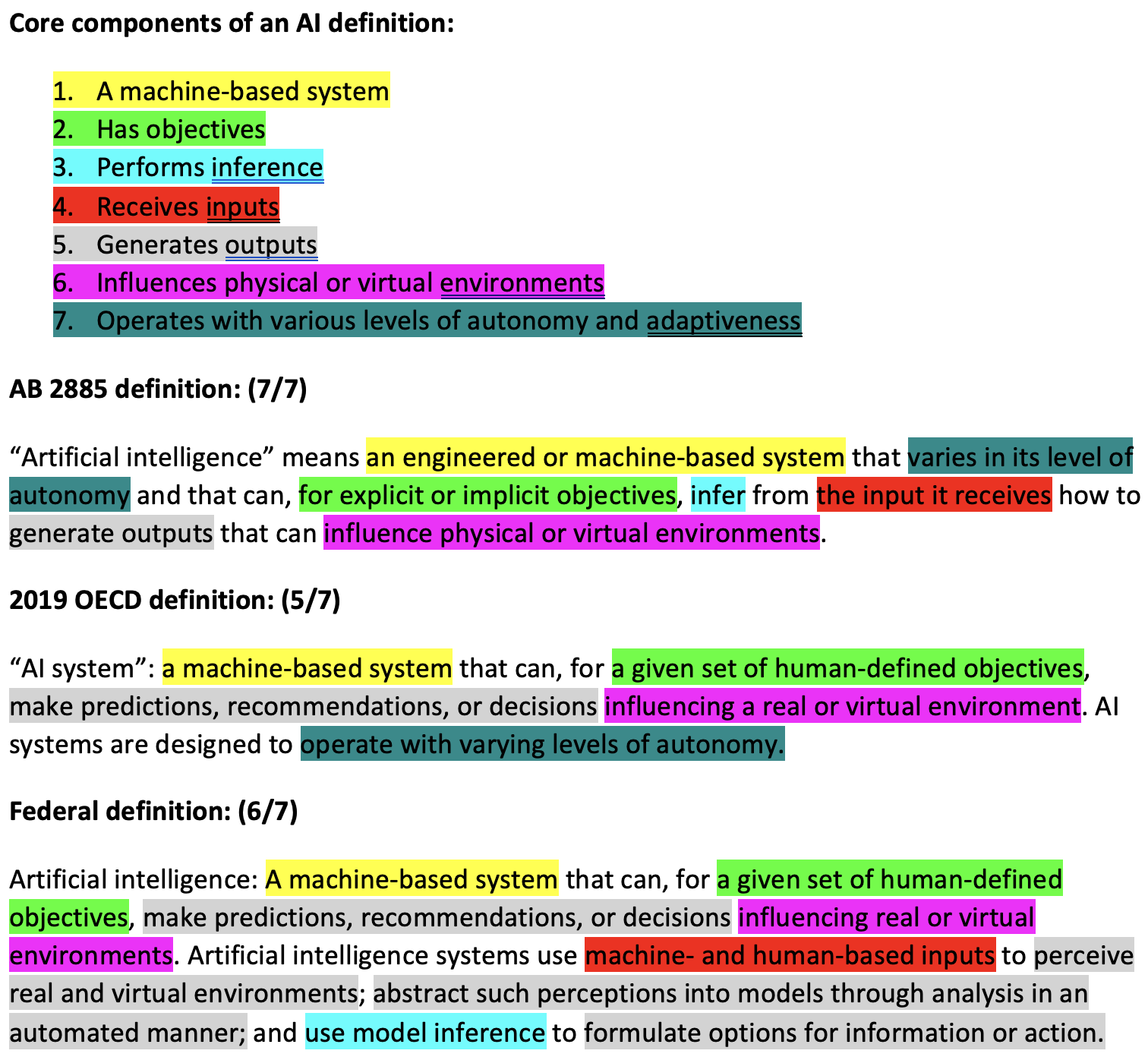

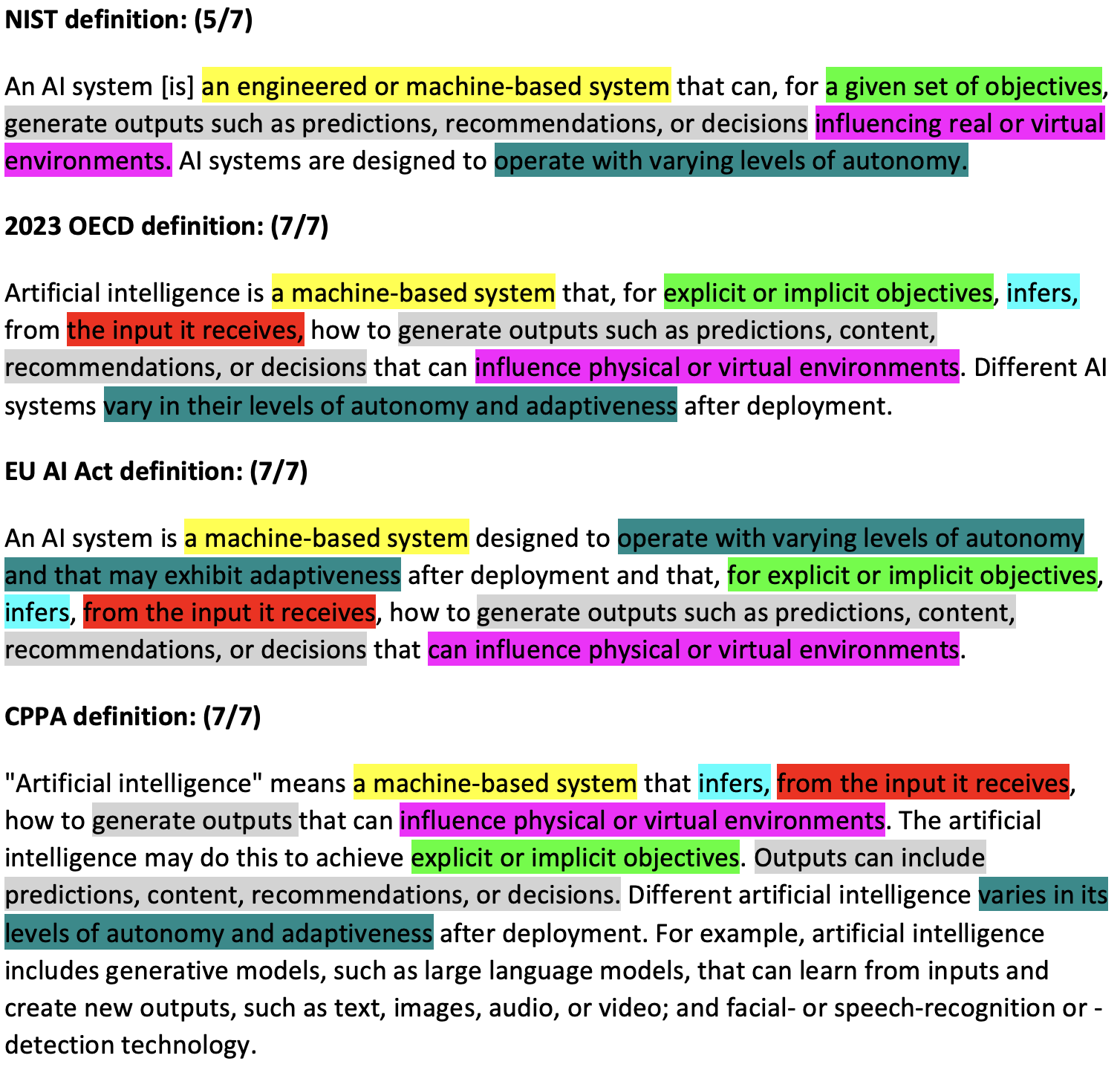

The policy committee also sent me this fascinating new information on where the definition comes from – it was created by combining the key ideas from other AI definitions in the US and around the world (click to view full size):

You can see from this process why the current definition of ‘artificial intelligence’ in SB 1047 is a good one, but you can also see why we have a major problem: every single example used as the basis for the definition specifically covers a system; not a single one covers a model. In fact, the explainer uses this as the first highlighted “core component”: “a machine based system”. So this definition is being used for something it was never designed for, and as a result it doesn’t work at all.

The definition of “Artificial Intelligence” in SB 1047 was designed to cover a system, but SB 1047 uses it to cover a model.

So now it all makes sense. My earlier analysis of SB 1047 pointed out that it would work well as a bill covering systems, but not models. At the time, I didn’t know where the definition actually came from – now that we do, we can see exactly why SB 1047’s definition works so well with that single change from “model” to “system”.

We have to make a tough decision

This minor change in language is a major change in meaning. It does make the bill compatible with Senator Scott Wiener’s stated goal for the bill: “We deliberately crafted this aspect of the bill in a way that ensures that open source developers are able to comply.” But here is a key premise, which I don’t think anyone in the technical AI community disputes: no one can ever reasonably guarantee the safety of a model that has not yet been embedded in a system.

No one can ever reasonably guarantee the safety of a model that has not yet been embedded in a system.

The fact that this is true is really (really!) unfortunate. But it is not, as far as I’m aware, up for debate – it’s just a basic mathematical necessity that we have to deal with. If it weren’t true, regulating AI would be much easier: if we could require a reasonable guarantee of safety before someone releases a model, and it were possible to comply with that requirement, then we would prevent harms resulting from the use of the model by both good and bad actors.

But that’s not possible. If we change to covering systems instead of models, then we shift the requirement of ensuring safety to those actually using a model. That would not stop a bad actor from intentionally causing harm with the model. Instead, it would only stop those trying to do the right thing from accidentally causing harm.

So far, I haven’t heard any politician or bill sponsor explicitly acknowledge or tackle this unavoidable trade-off. You can either have a higher level of control, through the increased centralization that comes from effectively banning large model releases, so that direct model access is available only to the employees of big tech companies; or you can have greater access to AI technology by allowing model release, which in the process increases the number of both “good guys” and “bad guys” who can fully utilise the technology.

This is a values issue

I saw this coming over a year ago – the fundamental problem of control versus access was clearly going to be the key issue of AI policy. So I spent months studying it, talked to dozens of experts, and wrote a long analysis titled “AI Safety and the Age of Dislightenment”. In it, I argued that we have to tackle directly the key question that was faced at the dawn of the Enlightenment: do we want to concentrate power in a few hands, or do we want to distribute it widely?

AI could be the greatest source of power in history. So deciding how we want to distribute or concentrate that power could turn out to be the most significant decision in our history.

I’m not even going to state my opinion on this issue here, because it really doesn’t matter (and I already made it pretty clear in the earlier article mentioned above!). It’s a big question, and it will have big consequences for us all. SB 1047 is just one little bill in one state in one country – but it could be enormously significant. California is home to Meta, the creator of the strongest open source models outside of China; to NVIDIA, which provides the hardware nearly all large models are trained on; and to Google and OpenAI, two of the top providers of commercial models. And of course it’s also home to thousands of founders trying to create the next generation of AI systems.

What happens in California with this one bill really could change the world. I hope those voting on it do so with a full understanding of its meaning and implications.